Secure, safe and fair machine learning for healthcare

Coordinating partners: Jamal Atif and Aurélien Bellet

Coordinating institution: Paris Sciences et Lettres University (PSL)

Keywords: machine learning, massive health data, trust, cybersecurity, federated learning, confidentiality, robustness, fairness

The healthcare sector (public and private) generates a vast amount of data from various sources, including electronic health records, advanced imaging techniques, high-throughput sequencing, wearable devices, and population health data. Massive datasets, or “big data,” analyzed with sophisticated machine learning algorithms, have the potential to inform the development of more effective and personalized treatments, interventions, and policies, and to improve healthcare delivery and outcomes. However, the sensitive nature of personal health data, cybersecurity risks, biases in the data, and the lack of robustness of machine learning algorithms currently limit the widespread use and exploitation of these data. These limitations hinder the benefits that massive health data analysis could bring to individuals and society.

The use of health data is governed by a complex and extensive set of ethical and legal requirements. Ensuring the security of these data, regardless of their nature and of how they are transmitted, processed, and transformed, is essential. At the same time, the methods used to analyze and exploit these data must themselves be secure, fair, and robust. This is particularly important given the growing number of cyber-attacks on the healthcare sector, driven mostly by the personal, economic, and innovation value of medical data and of their processing.

The goal of this project is to overcome the challenges that prevent the effective use of personalized health data. To achieve this, we will develop new machine learning algorithms that are designed to handle the unique characteristics of multi-scale and heterogeneous individual health data, while providing formal privacy guarantees, robustness against adversarial attacks and changes in data dynamics, and fairness for under-represented populations. By addressing these barriers, we hope to unlock the full potential of personalized health data for a wide range of applications.

More precisely, the project will address the following scientific challenges:

- Privacy-preserving learning: new results in differential privacy and homomorphic encryption;

- Federated vs. centralized learning: new methods and accuracy/privacy trade-offs;

- Robustness: data bias, non-stationarity, model drift, data shift, domain adaptation, new attacks, new defenses;

- Machine unlearning, or the right to be forgotten.

The project brings together a consortium of established researchers with expertise in machine learning, statistics, privacy, robustness, and biomedical applications, and with a clear commitment to unlocking the full potential of machine learning for healthcare. Its innovative character lies in its ability to mobilize such a unique community of researchers. Moreover, the project is positioned between two French priority research programmes (PEPRs), Cybersecurity and Digital Health, which gives it a particular role in disseminating knowledge and practices between research communities that have so far had little opportunity to interact.

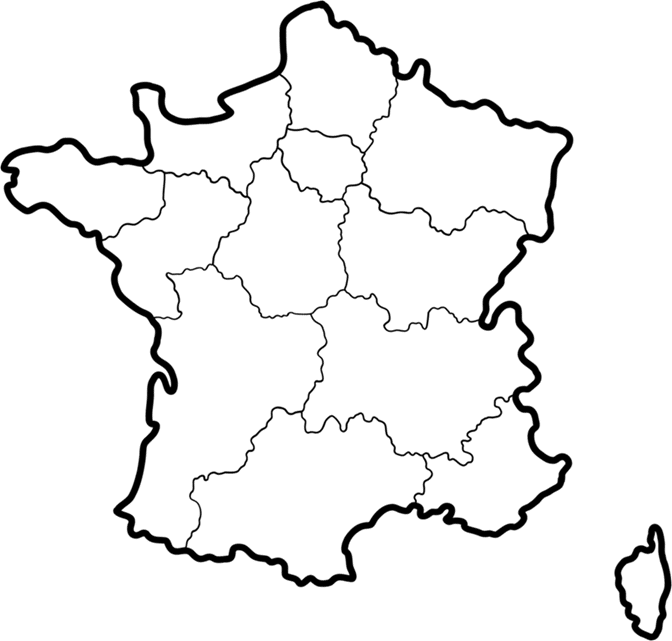


| Laboratory or department, team | Supervising institutions |
|---|---|
| LAMSADE – UMR 7243 | CNRS, Paris-Dauphine-PSL University |
| LaTIM – U1101 – Cyber Health team | Inserm, Bretagne Occidentale University, IMT Atlantique; partner: CHU Brest |
| CRIStAL – UMR 9189 – Inria Magnet team | CNRS, Inria, University of Lille, Centrale Lille Institut; partner: Mines Télécom |
| Centre Inria d’Université Côte d’Azur – Inria PREMEDICAL team | Inria, Inserm, University of Montpellier |
| CEA LIST – DIN and DSCIN departments | CEA, Paris-Saclay University |
| DI-ENS – UMR 8548 | CNRS, ENS, PSL, Inria |
| Inria Sophia Antipolis – EPIONE team | Inria, Côte d’Azur University |
| CITI Lab – Inria Privatics team | INSA, Inria Grenoble, Inria Lyon, Lyon University |